Nginx Cache Failure

Astro

One of the problems with switching to Astro, or really any other app-based framework, is that most of them don’t support clustering. While I briefly covered installing Astro, what I neglected to mention was the work I had to put into caching. Normally this wouldn’t be a problem, but I run a clustered setup with multiple web servers and an HA storage system. Applications are self-contained, and at the time of writing Astro cannot be configured for HA in the way other static sites can. We don’t want to contaminate the web servers themselves, so it’s best to run it on its own node. That part was fine, but what do we do when the node needs to go down for patching? Caching. Nginx has a powerful caching engine, and it just so happens that most JavaScript framework sites use it. Originally I looked at migrating to self-hosted Ghost and learned of this “limitation” early on. The reality is that these are generally deployed to something like Cloudflare or another page hosting service.

After some work I set up caching in Nginx and everything was fine. The key-based architecture made it easy for each node to access and serve the static pages from shared storage, and the timeouts allowed the primary node actually hosting Astro to go down for patching while the content stayed available.
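A minimal sketch of what this kind of setup can look like. This is not the exact config in use here: the zone name `site_cache`, the sizes, and the lifetimes are assumptions for illustration. Only the `/media/cache` path comes from this setup, and `levels=1:2` is inferred from the directory layout visible in the error log further down.

```nginx
# http context (nginx.conf): where cached responses live on the shared mount.
# keys_zone name, sizes, and inactive time are placeholder values.
proxy_cache_path /media/cache levels=1:2 keys_zone=site_cache:50m
                 max_size=5g inactive=7d use_temp_path=off;

# location context: use the zone and keep serving cached pages
# when the upstream node is down or erroring.
proxy_cache site_cache;
proxy_cache_valid 200 301 302 10m;
proxy_cache_use_stale error timeout updating http_500 http_502 http_503 http_504;
proxy_cache_background_update on;
```

The `proxy_cache_use_stale` line is what lets the web servers keep answering from cache while the node hosting the app is offline for patching.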

Fast forward a few months, and I have patched, modified, and otherwise adjusted the architecture. One day, when tabbing over to a previous article I wrote, I noticed the page load was… slow. Not slow from a normal usage perspective, but slower than it had been.

X-Cache-Status MISS

Curious, I pulled up the dev tools to see what may be happening.

Hm, that’s no good. Subsequent attempts were still misses. That’s a problem: it meant that each request was hitting the node itself. Let’s take a closer look at the logs.

    [crit] 1339#1339: *335 chmod() "/media/cache/a/63/8b4ef4815020e6e1c5552bc75375563a.0000000209" failed (1: Operation not permitted) while reading upstream, client: WAN, server: couchit.net, request: "GET / HTTP/2.0", upstream: "http://LAN", host: "couchit.net"

Odd. It seems that in the Nginx error.log we have tons of cache requests failing on permission issues. Let’s see if it’s related. I was smart enough to write logging for the cache status when I first made it.

    00:42:32 -0400] "HEAD /main HTTP/1.1" MISS

Well, that’s not great, but it used to function fine. It was time to actually start testing. First I checked whether it was a user permission issue. To do that I had to attempt to read and write as the www-data user:

    sudo -u www-data touch /media/cache/testfile

No errors. Let’s try setting some permissions, since Nginx was using chmod:

    sudo -u www-data chmod 644 /media/cache/testfile

It worked absolutely fine. That’s not really what I wanted to see, because a failure would have given me better clues. Now it was time to think about this from a higher level.
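For reference, cache-status logging like the kind mentioned above can be done with a custom log format. This is only a sketch: the format name `cache_log`, the field order, and the log path are assumptions, not the config actually running here. `$upstream_cache_status` is the standard Nginx variable holding MISS, HIT, EXPIRED, and so on.

```nginx
# http context; the format name and log path are hypothetical
log_format cache_log '$remote_addr [$time_local] "$request" '
                     '$status $upstream_cache_status';

access_log /var/log/nginx/cache_access.log cache_log;
```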

Storage Subsystems

Now that I knew I could read and write, something else had to be happening, so it was time to look at the tech stack in general. Remember, I mentioned this was all HA, so none of the data actually lived on the web servers; it was simply mounted. It was time to look at the actual storage subsystem. I was able to chmod, but a lot of permissions go into storing a file besides who can read and write. With this in mind I decided to look at the mount logic itself. The host FS is a ReFS HA volume that I mount at boot.

    //10.0.0.195/cache /media/cache cifs uid=www-data,gid=www-data,mfsymlinks,credentials=*.smbcredentials,iocharset=utf8,file_mode=**,dir_mode=**,x-systemd.automount 0 0

This looked fine, but I had made modifications to this mount while building automation for the mount assignments, in an effort to make the template more readable. I ended up landing on the following Ubuntu blog, which led me down the right rabbit hole: MountCifsFstab

The option that really stood out was noperm, so I decided to dive further into CIFS flags. I ended up with noperm to disable permission checks on the client side, and nounix to prevent the client from attempting to use UNIX extensions. Finally I added noserverino, which makes the Linux client generate its own inode numbers instead of relying on the ones reported by the Windows server.

I ended up with something like this

    //10.0.0.195/cache /media/cache cifs uid=www-data,gid=www-data,noperm,nounix,noserverino,vers=3.0,mfsymlinks,credentials=*.smbcredentials,iocharset=utf8,file_mode=**,dir_mode=**,x-systemd.automount 0 0

Now that I was cutting out some of the Unix-style filesystem and file-specific permissions and configuration, a quick Nginx restart on all my nodes got me cache hits again.

Nice! That makes me a lot happier. But now that we’re here, let’s take a look at the entire caching system and see if we can make any improvements. I’m here anyway.

Nginx Cache Configuration

No one expects Nginx caching configurations (well, this isn’t the full config or a setup guide, but still). Now that we were getting hits, it kind of HIT me that I should refresh myself on my caching logic. In my case, while I did define a lock and a timeout, I did not specify proxy_cache_lock_age.

    proxy_cache_lock on;
    proxy_cache_lock_timeout 5s;
    proxy_cache_lock_age 10s;

Nice. It’s safe to use a lock in an HA environment, I specified a timeout, and now finally the overall lock age. Of course, none of this means much if I can’t see the failures that tipped me off in the first place. So we set this header ourselves, but remind me to get this into Grafana.

    # Show cache result in headers
    add_header X-Cache-Status $upstream_cache_status;

That’s all great; we have a working cache system on shared storage and a header to easily check what the status is. However, I think I might want to add some abuse-centric tricks. Security is an onion, and while smart firewalls, Nginx connection limits, hardware firewalls, and so on are great, and the Nginx cache is meant to get beaten on to protect the node, let’s see what else we can do.

Protecting from rudimentary cache abuse

To do that I should explain how I handle caching on a higher level. In my case I make as many global definitions as possible. I have several sites, so making modifications to each one can be painful even with automation. In this case the caching configuration is inserted into each site by calling caching.conf.

I do this by defining caching.conf inside of the global nginx.conf. You can do this with other things like security configurations etc. Next is at the site level, when you define your site you simply add caching.conf to the definition.

    server {
        server_name mywebsite.net www.mywebsite.net;

        location / {
            include /etc/nginx/includes/caching.conf;
            proxy_pass http://10.0.0.1:1234;
        }
    }

“Wait, didn’t you say that you define it in nginx.conf?” Well, you would be right. Defining it in nginx.conf configures Nginx globally, but for specific requests it needs to be set in the site definitions.

Anyway, now that you know how it’s configured, it’s easier to explain the next part. Let’s globally set some request limits, then map them to a memory zone so Nginx remembers who’s connected. These can be whatever you want depending on traffic, and the ones shown are just examples. While we’re at it, let’s add some logging for when limits are hit.

    map $http_range $block_multi_range {
        default 0;
        "~*,.*" 1;
    }

    # Remote rate limits
    limit_req_zone $binary_remote_addr zone=req_limit_per_ip:99m rate=150r/s;
    limit_conn_zone $binary_remote_addr zone=conn_limit_per_ip:99m;

    # Log when limits are triggered
    limit_req_log_level warn;
    limit_conn_log_level warn;

Great. Now, since we’re here, let’s declare that we support byte ranges and enable them. This will let partial downloads happen, which can save a little bandwidth and make things easier on the client.

    # Advertise support for clients
    add_header Accept-Ranges bytes;

    # Enable byte-range support for proxied content
    proxy_set_header Range $http_range;
    proxy_set_header If-Range $http_if_range;

One last addition to nginx.conf itself, and then we can move on to caching.conf.

    include /etc/nginx/includes/caching.conf;

Alright, now let’s try to implement some basic anti-abuse logic. These will need to be fine-tuned based on traffic, bandwidth, abuse rates, and really your content, and only you will know that. So take the following as an example just to show you where the numbers go.

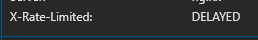

    limit_req zone=req_limit_per_ip burst=60 delay=5;
    limit_conn conn_limit_per_ip 10;
    add_header X-Rate-Limited $limit_req_status;

Let’s walk through them. limit_req zone=req_limit_per_ip burst=60 delay=5 specifies the zone we defined in nginx.conf (because you can define multiple). burst=60 allows small surges, in this case up to 60 requests over the configured rate, for things like initial page loads. delay=5 serves the first 5 of those excess requests immediately; anything beyond that, up to the burst size, is delayed.

Now limit_conn conn_limit_per_ip 10; is much simpler. It does what it says on the tin: each connecting IP is limited to 10 concurrent connections. Most browsers open several concurrent connections, so this needs to be set at a sane value, but it doesn’t need to be super high, and it helps deter download bots and crawlers.
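One loose end: the $block_multi_range map defined in nginx.conf earlier is not consumed by any of the snippets shown here. A minimal sketch of how it could be used, assuming the goal is to refuse requests that ask for multiple byte ranges at once. The 416 status code is an assumption, so pick whatever fits your setup.

```nginx
# server or location context; relies on the $block_multi_range map
# from nginx.conf. The map yields 1 when the Range header contains a comma.
if ($block_multi_range) {
    return 416;
}
```

Nginx treats the empty string and "0" as false in an `if`, so ordinary single-range and no-range requests pass straight through.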

The final load test

After a quick Nginx restart it was time to start battering the site to see what we got. After a few refreshes we went from MISS to HIT as the cache filled, but spamming subsequent pages, especially those with lots of content like pictures, netted us this.

Nice! If we want to be even nicer, we can eventually stand up images or warnings in place of content to alert users that they are hitting limits and need to slow down. That should be super rare, though, as delayed means just that, and the content finishes eventually.
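A rough sketch of what that friendlier response could look like. By default Nginx answers rejected requests with a 503; the snippet below switches that to 429 and serves a static page instead. The /429.html page and its root directory are hypothetical names for illustration.

```nginx
# http, server, or location context: answer rejected requests
# with 429 instead of the default 503
limit_req_status 429;
limit_conn_status 429;

# server context: serve a static "slow down" page for 429 responses
# (the file name and root path are placeholders)
error_page 429 /429.html;
location = /429.html {
    root /var/www/errors;
    internal;
}
```

This only applies to requests that are actually rejected. Requests that are merely delayed still get the real content.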